Dear Condor experts,

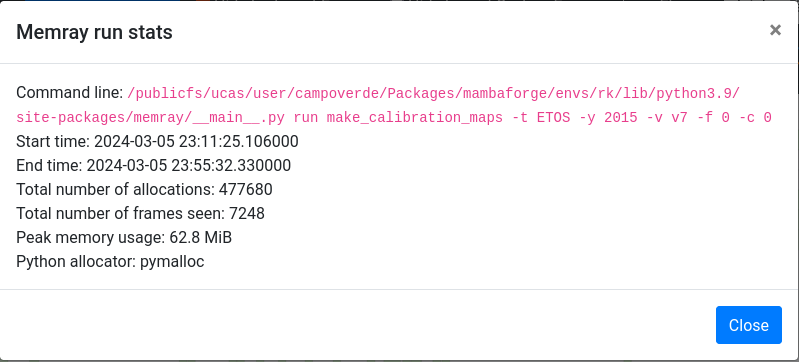

I am running a job that seems to be going beyond 8Gb in memory usage. However when I run it with memray (a memory profiler for python projects) I see that my memory usage is only 107Mb, as you can see in the screenshot below:

I have contacted the Python mailing list:

and although I tried turning off multithreading, I still get this high memory usage. Why is this happening, and how should I fix it? I cannot use 16 GB of memory in my jobs, since very few machines have that much memory.
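
In case it helps with diagnosing this, here is a minimal sketch of how the peak resident memory could be checked from inside the Python process itself, to compare against both the memray figure and what Condor reports. It assumes a Unix-like execute node where the standard `resource` module is available; `peak_rss_mb` is a hypothetical helper name, and the unit handling assumes `ru_maxrss` is given in kilobytes on Linux and in bytes on macOS.

```python
import resource
import sys


def peak_rss_mb(who=resource.RUSAGE_SELF):
    """Return the peak resident set size in MB for this process or its children."""
    peak = resource.getrusage(who).ru_maxrss
    # Assumption: ru_maxrss is in kilobytes on Linux and in bytes on macOS.
    divisor = 1024 * 1024 if sys.platform == "darwin" else 1024
    return peak / divisor


if __name__ == "__main__":
    # Call this at the end of the job, after the real work has finished.
    print(f"peak RSS, this process: {peak_rss_mb():.1f} MB")
    # Child processes that have already been waited for are accounted separately.
    children = peak_rss_mb(resource.RUSAGE_CHILDREN)
    print(f"peak RSS, largest waited-for child: {children:.1f} MB")
```

If the number for this process is close to 107 MB while the job as a whole is still charged several GB, memory held by child processes would be one candidate explanation worth checking.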

Cheers.
